Author

Tsung-Hsing Ho

Abstract

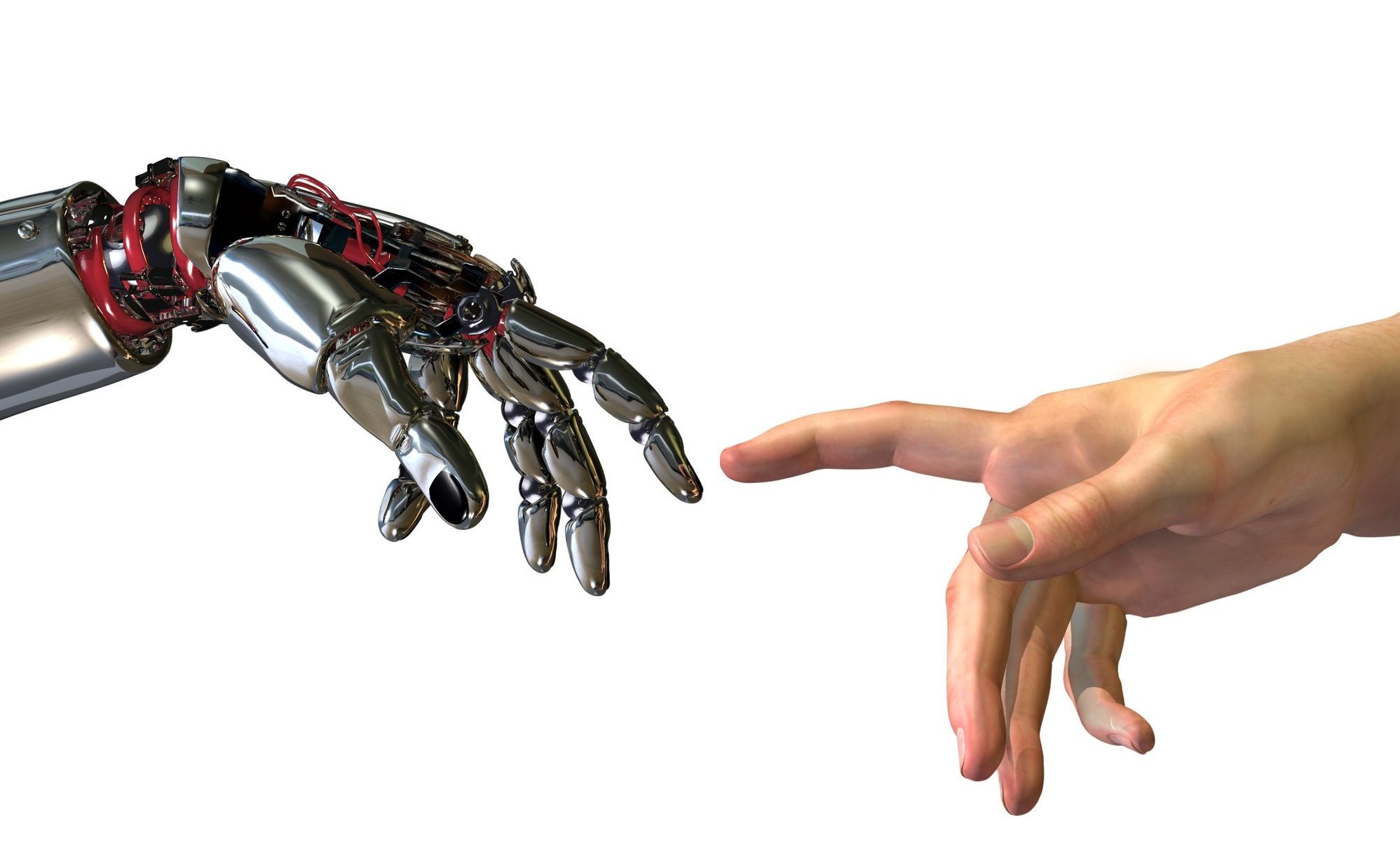

With the advance of AI technology, robots may take over decision making from humans in many aspects of daily life. For humans to accept this, however, the decisions robots make must be morally acceptable to humans. A natural thought is that robots should be taught to apply moral reasoning as humans do. For example, MIT's Moral Machine project attempts to align robots' moral reasoning with that of humans. In other words, robots should act as moral agents like us. Call this the anthropomorphic view. I oppose the anthropomorphic view. Drawing on P. F. Strawson's account of reactive attitudes, I argue that it is morally wrong and psychologically unacceptable for robots to interfere with our autonomy.

Bio

Tsung-Hsing Ho's main research interests include epistemic normativity, the normativity of mental attitudes, and the fitting-attitude account of value. He is an assistant professor in the Department of Philosophy at National Chung Cheng University (Taiwan).